Container, Kubernetes and SR-IOV

This article is the transcript of a tech-sharing session in my team and assumes the audience already has some background knowledge.

Container Basics

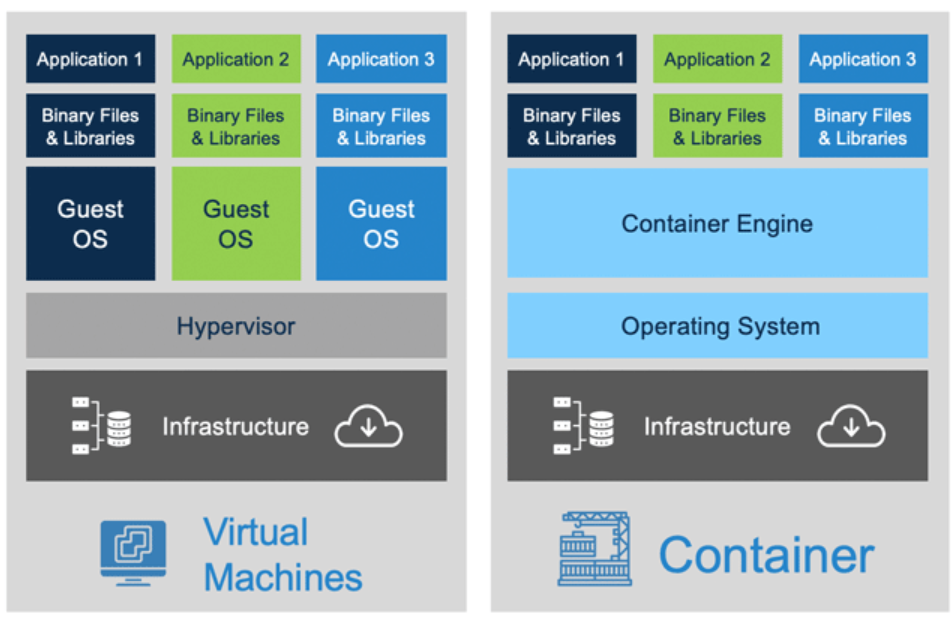

“A container is NOT a virtual machine!”

Linux provides namespaces and cgroups to isolate or limit resources between different processes: namespaces control what a process can see, and cgroups limit how much it can use.

A container is a technique that uses namespaces and cgroups (plus a union file system) to create an isolated environment in which a process can run.
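To make the namespace idea concrete, here is a minimal Python sketch that lists the namespaces a process belongs to by reading the symlinks under `/proc/<pid>/ns`. It assumes a Linux host; the helper names `parse_ns_link` and `namespaces_of` are made up for this illustration.

```python
import os
import re

# A namespace symlink target looks like "net:[4026531992]".
NS_LINK = re.compile(r"^(?P<kind>[a-z_]+):\[(?P<inode>\d+)\]$")


def parse_ns_link(target: str) -> tuple[str, int]:
    """Split a /proc/<pid>/ns symlink target into (namespace type, inode)."""
    match = NS_LINK.match(target)
    if match is None:
        raise ValueError(f"not a namespace link: {target!r}")
    return match["kind"], int(match["inode"])


def namespaces_of(pid: str = "self") -> dict[str, int]:
    """Map each entry under /proc/<pid>/ns to its namespace inode (Linux only)."""
    ns_dir = f"/proc/{pid}/ns"
    result = {}
    for entry in sorted(os.listdir(ns_dir)):
        _kind, inode = parse_ns_link(os.readlink(os.path.join(ns_dir, entry)))
        result[entry] = inode
    return result


if __name__ == "__main__" and os.path.isdir("/proc/self/ns"):
    # Two processes share a namespace exactly when these inodes match.
    for name, inode in namespaces_of().items():
        print(f"{name:20s} {inode}")
```

Running it once on the host and once inside a container should show different inode numbers for the namespaces the container runtime has unshared, such as `net`, `pid` and `mnt`.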

Container Runtime

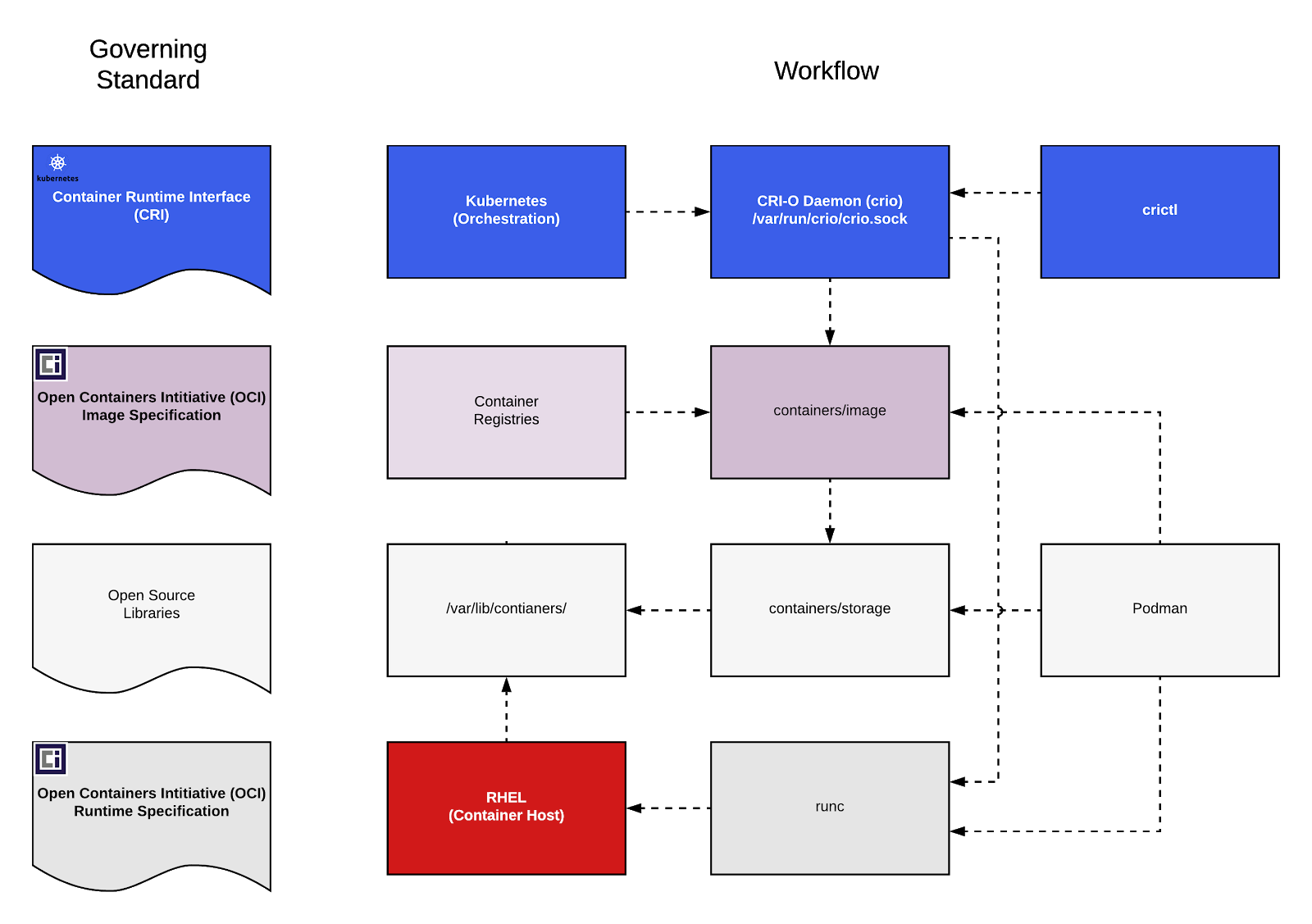

Namespaces and cgroups are mechanisms provided by Linux. A container is only a technique that uses these mechanisms. A container runtime is the software that actually uses the mechanisms to implement containers. However, different people may have different ideas about how to use the mechanisms, which is where two standards come in: OCI (Open Container Initiative) and CRI (Container Runtime Interface).

OCI - Open Container Initiative

Docker initiated the OCI, which is now a Linux Foundation project. OCI defines how to run a container and how to set up its namespaces and cgroups, and it also covers the format of a container image, its configuration, and its metadata.

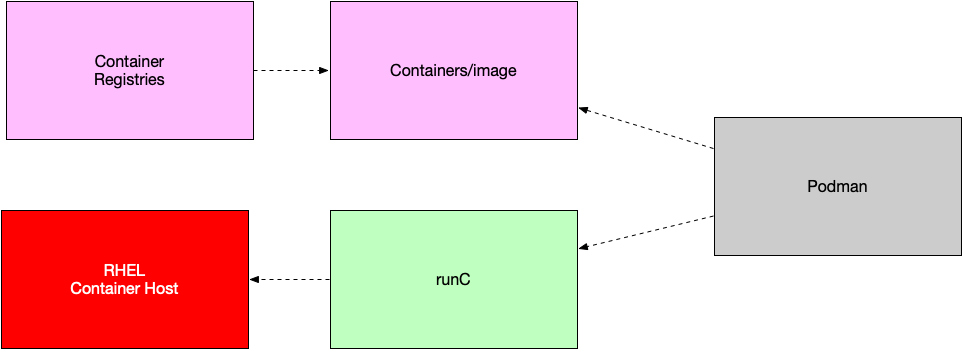

runC is the reference implementation of the OCI runtime specification on Linux.
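As a rough illustration of what "OCI defines how to set up the namespaces and cgroups" means in practice, the sketch below builds a trimmed-down OCI runtime `config.json` in Python. The field names follow the OCI runtime specification, but the function `minimal_oci_config` and the values are assumptions for this example, and a real config generated by `runc spec` contains many more fields.

```python
import json


def minimal_oci_config(args, memory_limit_bytes):
    """Build a trimmed-down OCI runtime config (illustrative, not complete)."""
    return {
        "ociVersion": "1.0.2",
        # The process to start inside the container.
        "process": {"args": list(args), "cwd": "/"},
        # Directory (relative to the bundle) holding the root file system.
        "root": {"path": "rootfs"},
        "linux": {
            # Each entry asks the runtime to create a new namespace of that type.
            "namespaces": [
                {"type": t} for t in ("pid", "network", "mount", "ipc", "uts")
            ],
            # cgroup limits: here only a memory cap.
            "resources": {"memory": {"limit": memory_limit_bytes}},
        },
    }


if __name__ == "__main__":
    config = minimal_oci_config(["sleep", "60"], 256 * 1024 * 1024)
    print(json.dumps(config, indent=2))
```

The `namespaces` list maps to the isolation side and `resources` maps to the cgroup side described earlier.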

CRI - Container Runtime Interface

Container Runtime Interface is a container runtime standard published by Kubernetes. Kubernetes is a platform that manages and orchestrates containers at large scale. The platform combines many components, and the container runtime is one of them. Any runtime software that implements CRI can be used by Kubernetes as its container runtime component. Besides CRI, Kubernetes also relies on standards like CNI (Container Network Interface) and CSI (Container Storage Interface).

OCI is a standard that defines how to create/run/manage a container. CRI is a standard that defines how runtime software can be used by Kubernetes.

Introducing Container Runtime Interface (CRI) in Kubernetes

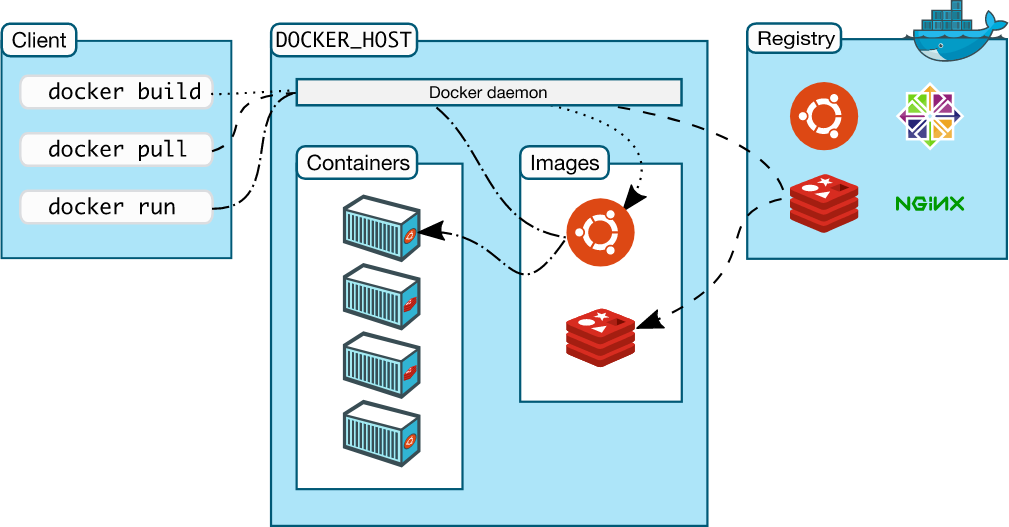

Docker

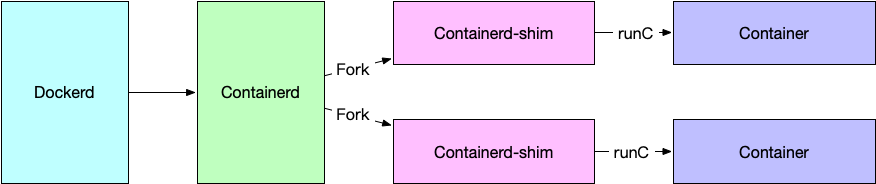

Docker is a container management tool with features such as building, pulling, and running containers. After installing Docker, you get at least three components: runC, containerd, and dockerd, plus the docker CLI client. The relationship between the three main components is as follows:

- The Docker CLI client sends commands to dockerd, the Docker daemon.

- dockerd then calls containerd to create the container.

- containerd forks a child process, containerd-shim.

- containerd-shim uses runC to create the target container.

Podman

Podman is also a container management tool. But unlike Docker, which needs a daemon running in the background, Podman creates and manages containers directly. Podman also uses runC, which means it follows the OCI standards.

From the CLI, Podman and Docker are used in the same way.

cri-o & crictl

cri-o is a container runtime published by Red Hat that implements both the OCI and CRI standards. It uses OCI to communicate with runC and CRI to communicate with Kubernetes. cri-o is not meant to be a tool like Docker or Podman but a runtime component used by Kubernetes. For this reason, cri-o only runs as a daemon and has no CLI of its own, so we cannot drive it directly from the command line. There is, however, an open-source tool called crictl. crictl is a CLI client for CRI-compatible runtimes, so it can act as a cri-o client, but it only has limited features and is mostly used by k8s developers as a dev tool. In short, cri-o implements CRI northbound toward Kubernetes and OCI southbound through runC.

OpenShift is a platform built on Kubernetes with many more advanced features. From the perspective of the low-level implementation, OpenShift and Kubernetes are basically the same. OpenShift uses cri-o as its container runtime, which is why we use cri-o and crictl in our SR-IOV container testing.

CNI

Similar to CRI, CNI is also a standard used by Kubernetes (it is maintained as a separate CNCF project). Instead of defining how to be a container runtime component, CNI defines how to configure and use the container network. CNI consists of a network API specification and libraries. CNI only focuses on configuring network resources when a container is created and releasing them when the container is deleted.

Currently, CNI support is already built into Kubernetes.

CNI Plugin

A CNI plugin must be an executable file in a certain path. Kubernetes (through its container runtime) calls this file to insert a network interface into the container's network namespace (for example, one end of a veth pair), make the necessary changes on the host (for example, connect the other end of the veth pair to a bridge), and then assign an IP address and routes to the interface.
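The calling convention can be sketched in a few lines of Python. Per the CNI specification, the runtime passes parameters through environment variables such as `CNI_COMMAND`, `CNI_NETNS` and `CNI_IFNAME`, sends the network configuration as JSON on stdin, and reads a JSON result from stdout. The skeleton below only echoes a result; it does not create or move any interface, and the function name `handle` is made up for this illustration.

```python
import json
import os
import sys

SUPPORTED_VERSIONS = ["0.3.1", "0.4.0"]


def handle(env, stdin_text):
    """Dispatch one CNI invocation and return the JSON-serialisable result."""
    command = env.get("CNI_COMMAND")
    if command == "VERSION":
        return {"cniVersion": "0.4.0", "supportedVersions": SUPPORTED_VERSIONS}

    conf = json.loads(stdin_text)  # network configuration from the runtime
    if command == "ADD":
        # A real plugin would now create or move an interface into the
        # namespace at env["CNI_NETNS"] and run IPAM. This sketch only reports.
        return {
            "cniVersion": conf["cniVersion"],
            "interfaces": [
                {"name": env["CNI_IFNAME"], "sandbox": env["CNI_NETNS"]}
            ],
            "ips": [],
        }
    if command == "DEL":
        # A real plugin would release the interface and IP here.
        return None  # success is signalled by exit code 0 and no output
    raise ValueError(f"unsupported CNI_COMMAND: {command!r}")


if __name__ == "__main__" and "CNI_COMMAND" in os.environ:
    result = handle(os.environ, sys.stdin.read())
    if result is not None:
        json.dump(result, sys.stdout)
```

Keeping the logic in `handle` rather than in the top-level script makes it possible to exercise the plugin without a container runtime by passing in a plain dictionary and a JSON string.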

SR-IOV CNI Plugin

🕹️ Example with SR-IOV CNI Plugin

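As a hypothetical illustration (not the example originally shown in the talk), the sketch below builds a network configuration for the SR-IOV CNI plugin and prints it as JSON. The `type`, `deviceID`, `vlan` and `ipam` keys are written as I understand the sriov-cni and host-local plugins to expect them, so treat them as assumptions to check against the plugin version you install. The network name, PCI address and subnet are invented.

```python
import json


def sriov_cni_config(name, vf_pci_address, subnet, vlan=0):
    """Build an SR-IOV CNI network configuration (illustrative values)."""
    return {
        "cniVersion": "0.3.1",
        "name": name,
        "type": "sriov",             # name of the plugin executable to call
        "deviceID": vf_pci_address,  # PCI address of the VF to hand over
        "vlan": vlan,
        "ipam": {
            "type": "host-local",    # simple IPAM plugin with local state
            "ranges": [[{"subnet": subnet}]],
        },
    }


if __name__ == "__main__":
    # Hypothetical VF address and subnet; a real setup would typically save
    # this output as a file under the CNI configuration directory.
    config = sriov_cni_config("sriov-net", "0000:03:02.0", "10.56.217.0/24", vlan=100)
    print(json.dumps(config, indent=2))
```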

How does OpenShift create an SR-IOV network?

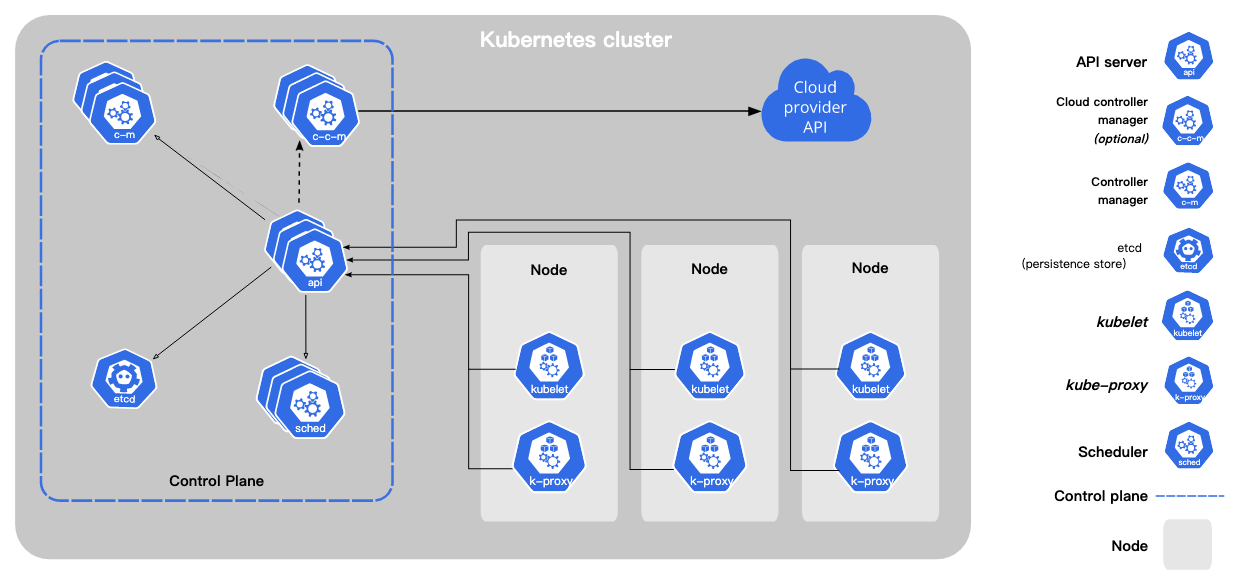

OpenShift/Kubernetes Basic Concepts

Control plane: the brain of Kubernetes, which makes decisions and schedules containers and jobs.

Worker plane (node): where the actual containers run. A worker node uses kubelet to communicate with the control node.

The concept of control node and worker node is similar to the control plane and data plane in SDN.

How to operate OpenShift/Kubernetes

The way to configure such a container platform is to describe the desired state of the cluster in a YAML file, much as we do with Ansible, and then submit this file to the k8s backend.


In a YAML file like this, we define what our application needs. So if our application needs the SR-IOV function, we also declare it in the YAML file.
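A minimal sketch of such a declaration, written as a Python dictionary and printed as JSON (which the Kubernetes API accepts alongside YAML). The annotation key `k8s.v1.cni.cncf.io/networks` is the one used by Multus to attach extra networks; the network name, image and resource name are hypothetical and depend on how SR-IOV is configured in the cluster.

```python
import json


def sriov_pod_manifest(name, image, network, resource_name, vf_count=1):
    """Describe a Pod that requests SR-IOV VFs (names are illustrative)."""
    quantity = str(vf_count)
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {
            "name": name,
            # Ask for an additional network attachment by name.
            "annotations": {"k8s.v1.cni.cncf.io/networks": network},
        },
        "spec": {
            "containers": [
                {
                    "name": name,
                    "image": image,
                    # Extended resources must have requests equal to limits.
                    "resources": {
                        "requests": {resource_name: quantity},
                        "limits": {resource_name: quantity},
                    },
                }
            ]
        },
    }


if __name__ == "__main__":
    manifest = sriov_pod_manifest(
        name="sriov-test",
        image="registry.example.com/test-image:latest",  # hypothetical image
        network="sriov-net",                             # hypothetical network
        resource_name="openshift.io/sriovnic",           # hypothetical resource
    )
    print(json.dumps(manifest, indent=2))
```

The resource request is what lets the scheduler place the Pod only on a node that still has a free VF.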

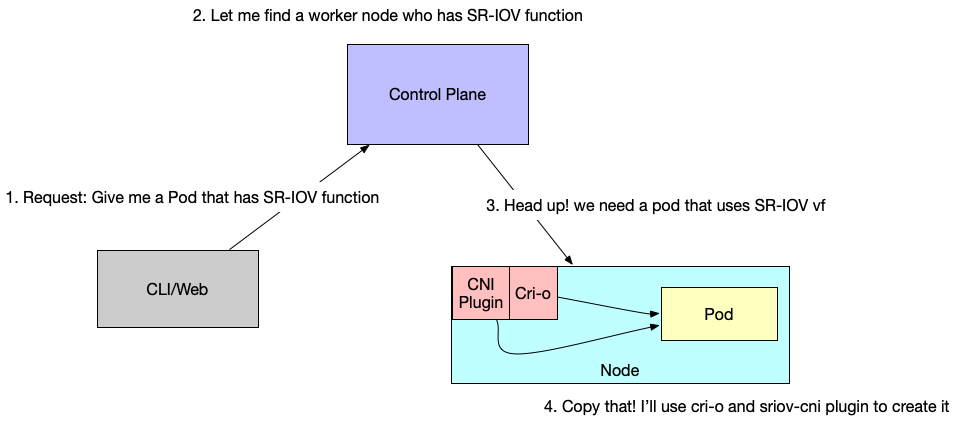

🎯After submitting such a YAML file to the OpenShift control plane, OpenShift finds a worker node that has the SR-IOV function and schedules the Pod to it. Then the CNI plugin does its work.

⁉️How does OpenShift know which worker node has the SR-IOV function?

OpenShift has a concept called an operator, which is essentially a customized controller. SR-IOV has its own SR-IOV operator. This operator runs a DaemonSet on every worker node to initialize the SR-IOV function and register it with the control node. Then, when a Pod needs the SR-IOV function, the controller knows where to schedule it. After the Pod is scheduled to this worker node, the CNI plugin is called to set up the container's network.

The CNI plugin does not configure the underlying network resource such as SR-IOV itself; it only moves the network resource into the container. The operator creates the corresponding network function.

So the process looks like this:

- Create the SR-IOV network operator.

- The operator creates a DaemonSet on the worker nodes.

- The DaemonSet and the operator initialize the SR-IOV function and report it to the OpenShift control node.

- OpenShift receives a request to create a Pod with an SR-IOV VF.

- OpenShift picks a worker node with the SR-IOV function from its database and schedules the Pod to that worker node.

- The worker node calls the SR-IOV CNI plugin to configure the container network and its IP/routes.
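The scheduling step in the list above can be modelled with a toy Python sketch. It is a simplified illustration, not the real Kubernetes scheduler: each node advertises how many units of each resource it has, and a Pod is placed on the first node that can satisfy every request. The `Node` class, the `pick_node` function and the resource name `example.com/sriov_vf` are all made up for this example.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    name: str
    # Resources the node has registered with the control plane.
    allocatable: dict = field(default_factory=dict)


def pick_node(nodes, requests):
    """Return the first node name that fits all requests, or None."""
    for node in nodes:
        fits = all(
            node.allocatable.get(res, 0) >= qty for res, qty in requests.items()
        )
        if fits:
            # Reserve the resources so later Pods see the reduced capacity.
            for res, qty in requests.items():
                node.allocatable[res] -= qty
            return node.name
    return None


if __name__ == "__main__":
    cluster = [
        Node("worker-0", {"cpu": 4}),
        Node("worker-1", {"cpu": 4, "example.com/sriov_vf": 2}),
    ]
    print(pick_node(cluster, {"cpu": 1, "example.com/sriov_vf": 1}))
```

Only `worker-1` advertises VFs in this example, so a Pod requesting one can only land there, and a request for more VFs than any node has left stays unscheduled.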

Script to create and run a container that uses SR-IOV

Steps for using SR-IOV in a container on a RHEL bare-metal simulation environment:

- Install cri-o and crictl

- Pull the container image

- Install the CNI plugin

- Create the CNI configuration

- Create the Pod configuration

- Create the Pod

- Create the container and put it into the Pod

- Verify that the container is using the SR-IOV VF

- Send some traffic for further checks
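The crictl part of these steps can be sketched as follows. This Python snippet only builds the Pod and container configuration files and the list of crictl commands; it does not execute anything. The subcommands (`pull`, `runp`, `create`, `start`, `exec`) are standard crictl subcommands, while the image name, file names, and the `<pod-id>` and `<container-id>` placeholders are assumptions to be replaced with real values printed by the previous commands.

```python
import json


def pod_config(name):
    """Pod sandbox configuration in the format crictl runp expects."""
    return {
        "metadata": {
            "name": name,
            "namespace": "default",
            "uid": f"{name}-uid",
            "attempt": 1,
        },
        "log_directory": "/tmp",
        "linux": {},
    }


def container_config(name, image):
    """Container configuration in the format crictl create expects."""
    return {
        "metadata": {"name": name},
        "image": {"image": image},
        "command": ["sleep", "3600"],
        "log_path": f"{name}.log",
        "linux": {},
    }


def planned_commands(image, pod_json="pod.json", ctr_json="container.json"):
    """Return the crictl invocations in order, as argument lists."""
    return [
        ["crictl", "pull", image],
        ["crictl", "runp", pod_json],  # prints the pod ID
        ["crictl", "create", "<pod-id>", ctr_json, pod_json],  # prints the container ID
        ["crictl", "start", "<container-id>"],
        # Check which interfaces ended up inside the container.
        ["crictl", "exec", "<container-id>", "ip", "link", "show"],
    ]


if __name__ == "__main__":
    image = "registry.example.com/test-image:latest"  # hypothetical image
    print(json.dumps(pod_config("sriov-pod"), indent=2))
    print(json.dumps(container_config("sriov-ctr", image), indent=2))
    for argv in planned_commands(image):
        print(" ".join(argv))
```

If the SR-IOV CNI configuration is in place, the interface listing from the last command should include the VF that was moved into the Pod's network namespace.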

MORE: What is a Pod and why do we use it?

In a system like Kubernetes or OpenShift, the Pod is the thing we normally deal with, not the container. A Pod is a group of containers that share one network, storage, and hostname. It is like a logical host, and the containers in a Pod are like processes on this host.

Why use Pod?

- In production, we normally have environments in which different processes depend on each other. The recommendation is to put each process in its own container, so we need a way to combine a group of containers: the Pod.

- If one of the containers in a service group dies, how do we define the state of the other containers in the same group? Treating the group as a single Pod gives it one state to manage.

- The containers in a Pod share the same network namespace and storage, which is more convenient for containers that belong to the same service.

Is a Pod also a container?

A Pod is implemented by creating a container that runs the “pause” command. All the service containers of the Pod join the namespaces of this “pause” container. When we create or delete a Pod, we are actually creating or deleting a pause container.